Robust and Fair Online Exams

How do you create a fair and robust online exam paper? Alison Hill and Nicholas Harmer share how they achieved this.

Authors: Alison M. Hill and Nicholas J. Harmer

What exactly is the shift in culture and/or organisational practice that you wish to highlight?

For the 2020/21 academic year, our one-hour invigilated examination was replaced by an open-book, non-invigilated 24-hour examination, and we were challenged to create a fair and robust online exam paper. High-stakes, time-pressured online assessments are an ideal environment for student collusion [1-4]. Some universities have adopted proctoring software (e.g., Respondus LockDown Browser) [5,6], and there have been reports of students using third-party helper sites (e.g., Chegg) or online chat groups [5]. The mainstream press has widely reported students cheating in online exams [7-12].

Our previous exam comprised two sections. The first, a data-handling section worth 40% of the exam, is based on a module laboratory class: students perform a selection of calculations using unseen data. A Smart Worksheet (developed with Learning Sciences) [13] was introduced in 2018/19 to upskill students in data processing and improve their confidence in tackling unseen problems. Before the pandemic, the Smart Worksheet was used formatively with staff support. In 2020/21 it was used online only: students were provided with a pre-recorded instructional video and a selection of historical datasets. The instant feedback provided by the Smart Worksheet was invaluable, and additional online synchronous sessions provided further support for the students.

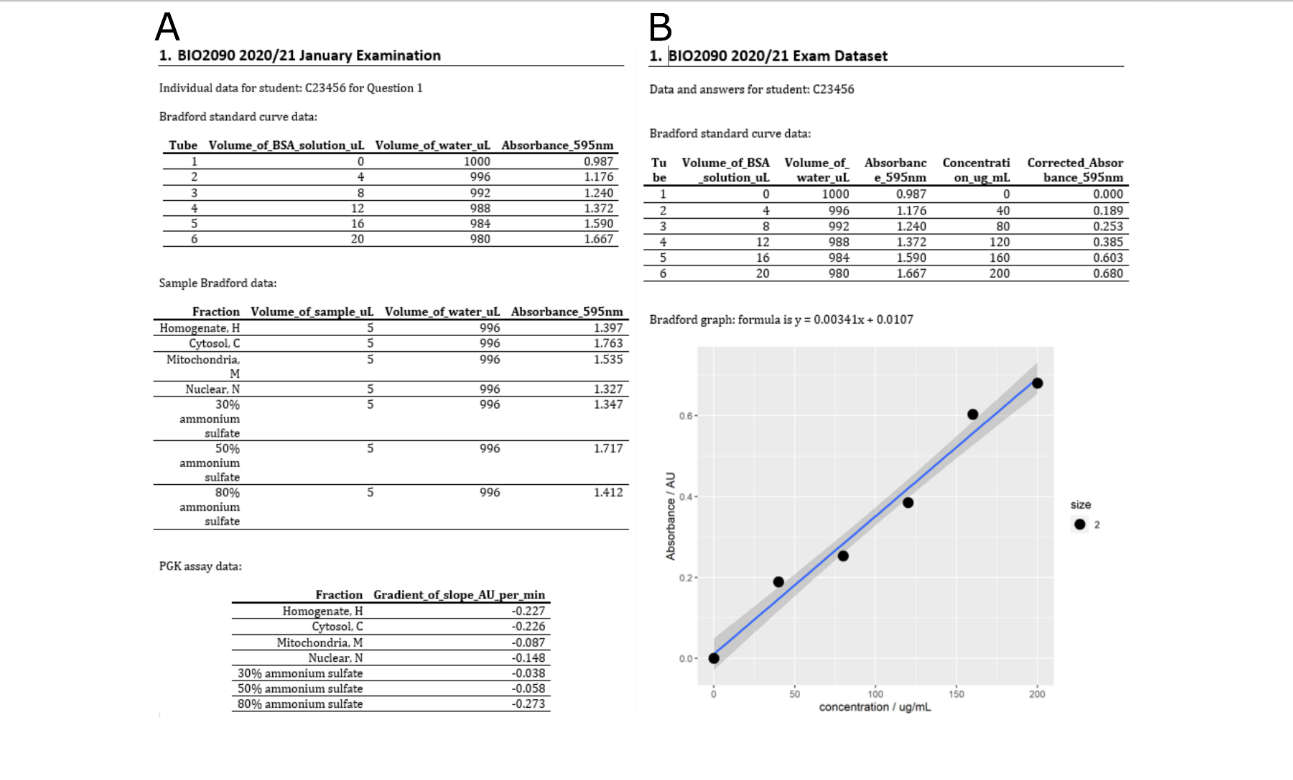

Examinations like ours, with a single correct answer, are at especially high risk of students checking answers with each other or working collaboratively [1]. Our solution was to generate unique datasets based on student-generated historical data [14]. The historical datasets provided a range of reasonable, student-derived values for the experimental results. We used these to develop a model of the experiment (in R) that produces randomized, interpretable data within these reasonable limits; our code generated 60 unique datasets and corresponding answer files (which included all answers and workings, including plotted data; figure 1). Students downloaded the exam paper and their unique dataset. This ensured that every student had their own dataset to process, reducing the incentive to cheat or collaborate. All students used the correct dataset and there was no evidence of collusion.
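The general idea can be illustrated with a short Python sketch. This is not the authors' code (their annotated R scripts accompany the paper [14] and the repository [22]); it only shows one way the approach could look. The experiment modelled here (a linear calibration curve with one unknown sample), the parameter names and the numerical limits are all hypothetical stand-ins for values that would be taken from historical student data.

```python
import random

# Hypothetical limits standing in for the spread seen in historical student
# data; the real experiment and its parameters are not given in this article.
HISTORICAL_LIMITS = {
    "slope": (0.045, 0.060),      # absorbance per ug/mL
    "intercept": (0.02, 0.05),    # absorbance of the blank
    "noise_sd": (0.002, 0.008),   # scatter on each reading
    "unknown_conc": (3.0, 9.0),   # ug/mL, true value of the unknown sample
}
STANDARDS = [0.0, 2.0, 4.0, 6.0, 8.0, 10.0]  # ug/mL


def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def make_dataset(candidate_number, exam_seed="exam-2021"):
    """Build one candidate's data and the answer key derived from it."""
    # A string seed built from the candidate number makes each dataset
    # different between candidates but reproducible for the markers.
    rng = random.Random(f"{exam_seed}:{candidate_number}")
    drawn = {name: rng.uniform(lo, hi)
             for name, (lo, hi) in HISTORICAL_LIMITS.items()}

    def reading(conc):
        noise = rng.gauss(0.0, drawn["noise_sd"])
        return round(drawn["intercept"] + drawn["slope"] * conc + noise, 3)

    absorbances = [reading(c) for c in STANDARDS]
    unknown_absorbance = reading(drawn["unknown_conc"])

    # The key is computed from the rounded values the student actually sees.
    slope, intercept = fit_line(STANDARDS, absorbances)
    return {
        "candidate": candidate_number,
        "data": {
            "concentration": STANDARDS,
            "absorbance": absorbances,
            "unknown_absorbance": unknown_absorbance,
        },
        "answers": {
            "slope": slope,
            "intercept": intercept,
            "unknown_conc": (unknown_absorbance - intercept) / slope,
        },
    }


def make_exam(candidate_numbers):
    """One dataset and answer key per candidate, keyed by candidate number."""
    return {number: make_dataset(number) for number in candidate_numbers}
```

Calling `make_exam` with a list of 60 candidate numbers would give 60 datasets, each with its own answer key. Deriving the key from the same rounded values that the student receives keeps the marker's numbers consistent with what a student can actually calculate.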

What did ‘working well’ look like?

Using individual datasets has been an important innovation to ensure a fair and robust examination in the online environment. The students reported that they preferred a 24-hour open-book online exam to time-restricted examinations. This format challenges academics to ensure that assessment is authentic and meaningful, and that the work is the student’s own and not the result of collusion or cheating. Such assessment must also remain compliant with University procedures and GDPR.

‘Using smart worksheets to practice [data processing] to prepare for these assessments allowed rapid and personalised feedback.’ Student feedback 2021.

‘The use of individual data sets for assessments was an excellent way of ensuring the work was fair for all students as collaboration was not possible.’ Student feedback 2021.

‘The innovative use of R-generated unique datasets is an exemplar of how robust assessments can be maintained in the era of online open-book exams without compromising the intended learning outcomes.’ Director of Education, Dr. Alan Brown

‘When the possibility of using individual data was postulated, I agreed that an alternative method to combat plagiarism was needed. The style of question where there is a single correct answer has been difficult to convert to the online, non-invigilated, open book style of examination. Although the majority of students have abided by the regulations, the temptation for collusion/plagiarism is increased when there is only a single correct answer.

The creation of individual data sets was designed to prevent plagiarism and as Examinations Officer I was keen to implement this approach as soon as possible. The method for uploading exam papers to our Exam portal enabled us to create a folder with individual data sets itemised by candidate number. This enabled us to keep within GDPR guidelines, but also was a simple effective way for us to upload the data and for students to easily and quickly find their data during their exam period.’ Biosciences Examinations Officer, Dr Katie Solomon

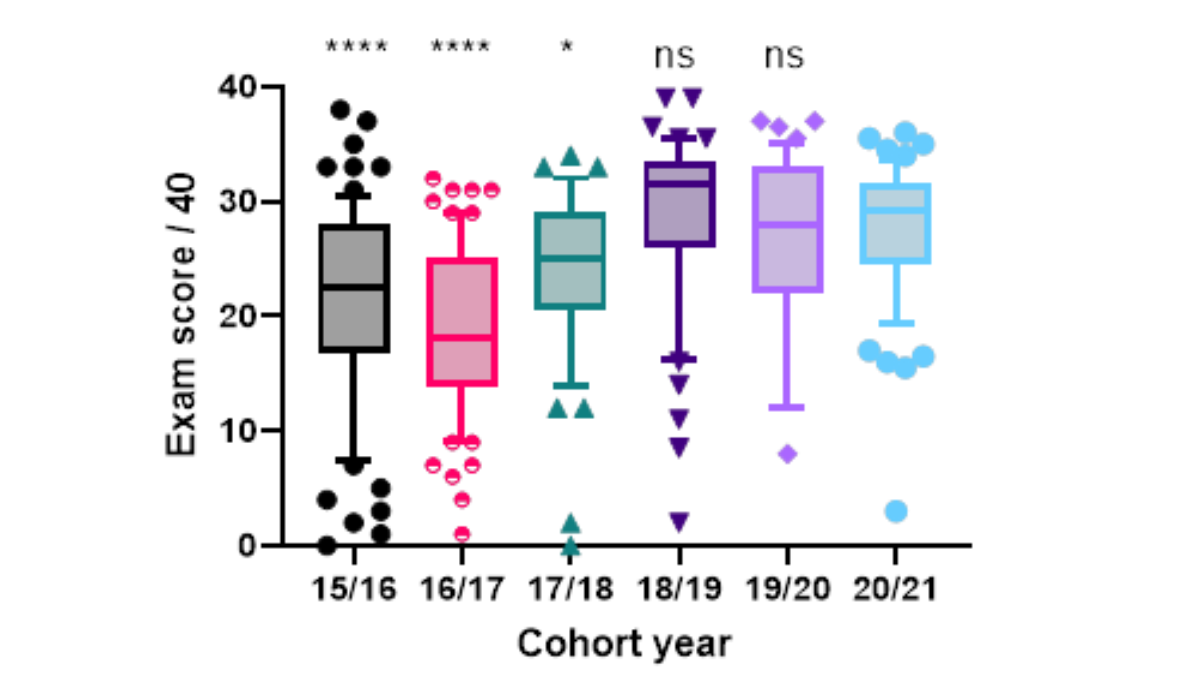

Student performance showed no statistically significant difference from the previous two years, despite students having access to their notes and the internet, and being under less time pressure (figure 2). The mean and median marks for the data section of the exam remained the same as in the previous two years. In contrast, the Smart Worksheet, which we introduced in 2018/19 to significantly improve students’ data-handling skills, has had a clearly noticeable effect on student marks (figure 4; p<0.05, Kruskal–Wallis test). This tool is switched off for the exam!

Our approach has resulted in increased marking times for this section of the exam. Efficiency gains from marking a question with a single numerical answer were not available. Staff fed back that this was a price worth paying to ensure that the work marked was the student’s own. We are now investigating ways of automating the marking. We hope to trial this on another second year Biochemistry exam in May 2022.
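One possible shape for such automation is sketched below in Python. It is an illustration only, not the authors' method, which the article does not describe: each submitted value is compared with the answer key for that candidate's own dataset and accepted if it falls within a relative tolerance. The function names, the 2% tolerance and the marks per question are all assumptions.

```python
import math


def mark_answer(submitted, expected, rel_tol=0.02, marks=2):
    """Give full marks if the submitted value is within rel_tol of the key."""
    try:
        value = float(submitted)
    except (TypeError, ValueError):
        return 0  # blank or non-numeric answer
    return marks if math.isclose(value, expected, rel_tol=rel_tol) else 0


def mark_script(candidate_number, submitted_answers, answer_keys):
    """Mark one script against the key for that candidate's own dataset."""
    key = answer_keys[candidate_number]
    return {
        question: mark_answer(submitted_answers.get(question), expected)
        for question, expected in key.items()
    }
```

A sketch like this only checks final values. It does not give credit for correct workings that follow an earlier mistake, which is the kind of judgement staff currently make when marking by hand.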

How could this practice be spread?

We have presented this work at three conferences and published a paper in the Journal of Chemical Education [14]. The paper is open access and includes annotated R scripts to facilitate adoption of our method; we have also published the R files [22]. The University released a press statement that resulted in a University website news story [15], as well as coverage by six other news outlets [16-21], including the Times Higher Education Supplement.

We intend to use this methodology in another second-year Biochemistry exam in May 2022, with automated marking. If that pilot is successful, we will be ready to offer this to other academics within Exeter, across the UK and internationally. Our method generates as many datasets as are required with no extra effort once the code is written. Wider deployment of unique datasets does, however, depend on automated marking, which will be crucial for adoption in classes with large cohorts.

References

1. Dietrich, N., Kentheswaran, K., Ahmadi, A., Teychené, J., Bessière, Y., Alfenore, S., Laborie, S., Bastoul, D., Loubière, K., Guigui, C., Sperandio, M., Barna, L., Paul, E., Cabassud, C., Liné, A., and Hébrard, G., Attempts, successes, and failures of distance learning in the time of COVID-19, J. Chem. Educ., 2020, 97, 2448-2457; DOI: 10.1021/acs.jchemed.0c00717.

2. Norris, M. University online cheating – how to mitigate the damage. Research in Higher Education Journal, 2019, 37(November). aabri.com/manuscripts/193052.pdf (accessed 2021-11-01).

3. Bilen, E., and Matros, A. Online cheating amid COVID-19, J. Econ. Behav. Organ., 2021, 182, 196-211; DOI: 10.1016/j.jebo.2020.12.004.

4. Dendir, S., and Maxwell, R. S. Cheating in online courses: Evidence from online proctoring. Comput. Hum. Behav. Rep., 2020, 2, 100033; DOI: 10.1016/j.chbr.2020.100033.

5. Gonzalez, C., and Knecht, L. D., Strategies employed in transitioning multi-instructor, multisection introductory general and organic chemistry courses from face-to-face to online learning, J. Chem. Educ., 2020, 97, 2871-2877; DOI: 10.1021/acs.jchemed.0c00670.

6. Howitz, W. J., Guaglianone, G., and King, S. M., Converting an organic chemistry course to an online format in two weeks: Design, implementation, and reflection, J. Chem. Educ., 2020, 97, 2581-2589; DOI: 10.1021/acs.jchemed.0c00809.

7. Akhabau, I. ‘Why more university students are cheating amid the Covid-19 pandemic’, https://metro.co.uk/2021/09/11/covid-19-cheats-more-university-students-tempted-by-at-home-exams-15237372/ (date accessed 7 Jan 2022).

8. New Zealand Herald, ‘University of Auckland cheating claims: ‘Prolific’ abuse of online exams by students on group calls’, https://www.nzherald.co.nz/nz/university-of-auckland-cheating-claims-prolific-abuse-of-online-exams-by-students-on-group-calls/PFV5YZ7DNWM5B6LFUWTEKJEEJ4/ (date accessed 7 Jan 2022).

9. Somerville, E. ‘Entire student houses caught cheating in online university exams’, https://www.telegraph.co.uk/news/2021/06/28/entire-student-houses-caught-cheating-online-university-exams/ (date accessed 7 Jan 2022).

10. Hardy, J. ‘University students offer to pay tutors to take their online exams during pandemic’, https://www.telegraph.co.uk/news/2021/06/06/university-students-enlist-private-tutors-take-online-exams/ (date accessed 7 Jan 2022).

11. McKie, A. ‘Students ‘twice as likely to cheat’ in online exams’, https://www.timeshighereducation.com/news/students-twice-likely-cheat-online-exams (date accessed 7 Jan 2022).

12. Baker, N. ‘TU Dublin had 445 cases of alleged cheating in online exams during pandemic’, https://www.irishexaminer.com/news/arid-40777299.html (date accessed 6 Jan 2022).

13. Learning Sciences, Learning Sciences Smart Worksheets, https://learningscience.co.uk/smart-worksheets (date accessed 7 Jan 2022).

14. Harmer, N. J., and Hill, A. M., ‘Unique Data sets and Bespoke Laboratory Videos: Teaching and Assessing of Experimental Methods and Data Analysis in a Pandemic’, J. Chem. Educ., 2021, 98, 4094-4100; DOI: 10.1021/acs.jchemed.1c00853.

15. University of Exeter, https://www.exeter.ac.uk/news/research/title_890418_en.html (date accessed 7 Jan 2022).

16. Grove, J., ‘Bespoke Robot-Written Exams to Curb Student Cheating’, https://www.timeshighereducation.com/news/bespoke-robot-written-exams-curb-student-cheating?fbclid=IwAR3QTxRGIXbs6Ksdi2wRlajAKoNzJLWURKSFSwm8_j9QfMAsqG9PQ8xJ2L4 (date accessed 6 Jan 2022).

17. Grove, J., 2022, ‘Could Custom Exams Prevent Cheating?’ https://www.insidehighered.com/news/2022/01/06/british-university-tries-custom-exam-reduce-cheating (date accessed 7 Jan 2022).

18. Mirage news, https://www.miragenews.com/unique-data-creates-fair-and-robust-online-exams-693956/ (date accessed 7 Jan 2022).

19. Phys.org, https://phys.org/news/2021-12-unique-fair-robust-online-exams.html (date accessed 7 Jan 2022).

20. Brogan, T., Education Technology, https://edtechnology.co.uk/e-learning/digital-learning-pioneers-develop-fair-and-robust-online-exam-method/ (date accessed 7 Jan 2022).

21. Abraham, J., Eminetra, https://eminetra.co.uk/digital-learning-pioneers-develop-fair-and-robust-online-test-methods/870121/ (date accessed 7 Jan 2022).

22. Harmer, N.J., https://github.com/njharmer/Student-specific-data.
